Please click here to see the most recent update.

UPDATE 4/28/17 11:45 a.m. MNT: We have 0 calls in the queue on our phone line and are working through about 80 tickets related to the False Positive repair utility. A good portion of those are simply awaiting customer verification.

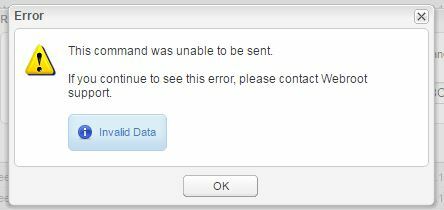

Please note, the utility was built to address only this specific false positive issue. It will be deactivated in the future.

If applications are operating normally on your systems, you do not need to implement the utility.

If you haven’t yet submitted a support ticket and you need the repair utility, please do so here. Please also include your phone number in the ticket.

Thank you.

Best answer by freydrew
